The creation of the first computer is a fascinating journey that spans several centuries and involves the contributions of many inventors, mathematicians, and scientists. The first computers were not the sophisticated machines we know today but mechanical or electromechanical devices built to perform specific calculations or tasks.

By today’s standards, the processing power of the first computers was minuscule, yet they were entrusted with critical tasks. These early machines were often housed in dedicated, access-controlled rooms, which reflected how valuable these technological marvels were considered at the time.

Mechanical Computers

One of the earliest known computing devices is the abacus, which dates back to ancient times and was used for basic arithmetic. The first significant developments in mechanical computing, however, came in the 17th century with calculating machines such as Blaise Pascal’s Pascaline (1642), followed in the 1820s by Charles Babbage’s Difference Engine, which was designed to compute mathematical tables automatically.
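
The Difference Engine worked by the method of finite differences: once the starting value of a polynomial and its successive differences are set, every further table entry can be produced by addition alone. The Python sketch below is a minimal software illustration of that idea, not a model of Babbage’s actual mechanism; the function name `tabulate_by_differences` and the example polynomial x² + x + 41 are our own choices.

```python
def tabulate_by_differences(initial_differences, steps):
    """Tabulate a polynomial using only additions, as a difference engine does.

    initial_differences: [p(0), Δp(0), Δ²p(0), ...] for a polynomial p.
    Returns [p(0), p(1), ..., p(steps - 1)].
    """
    diffs = list(initial_differences)
    table = []
    for _ in range(steps):
        table.append(diffs[0])
        # Update from the lowest order upward so each column adds the
        # *old* value of the next-higher difference.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return table


# p(x) = x² + x + 41: p(0) = 41, first difference 2, second difference 2
print(tabulate_by_differences([41, 2, 2], 5))  # [41, 43, 47, 53, 61]
```

For a polynomial of degree n the n-th difference is constant, so a finite set of adding columns is enough to generate the whole table.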

Analytical Engine (1830s)

Charles Babbage is considered one of the pioneers of computer science. Beginning in the 1830s, he designed a more advanced mechanical computer called the Analytical Engine, which had features resembling those of modern computers: an arithmetic unit (the “mill”), a memory (the “store”), programs and data supplied on punched cards, and the ability to branch conditionally. Although the Analytical Engine was never built during Babbage’s lifetime, it laid the foundation for future developments in computing.

Electromechanical Computers

In the late 19th and early 20th centuries, electromechanical computing devices began to appear. One notable example is the “Tabulating Machine” invented by Herman Hollerith, which used punched cards to process data and was first employed to tabulate the 1890 U.S. census.

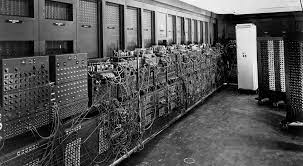

Vacuum Tube Computers (1930s-1940s)

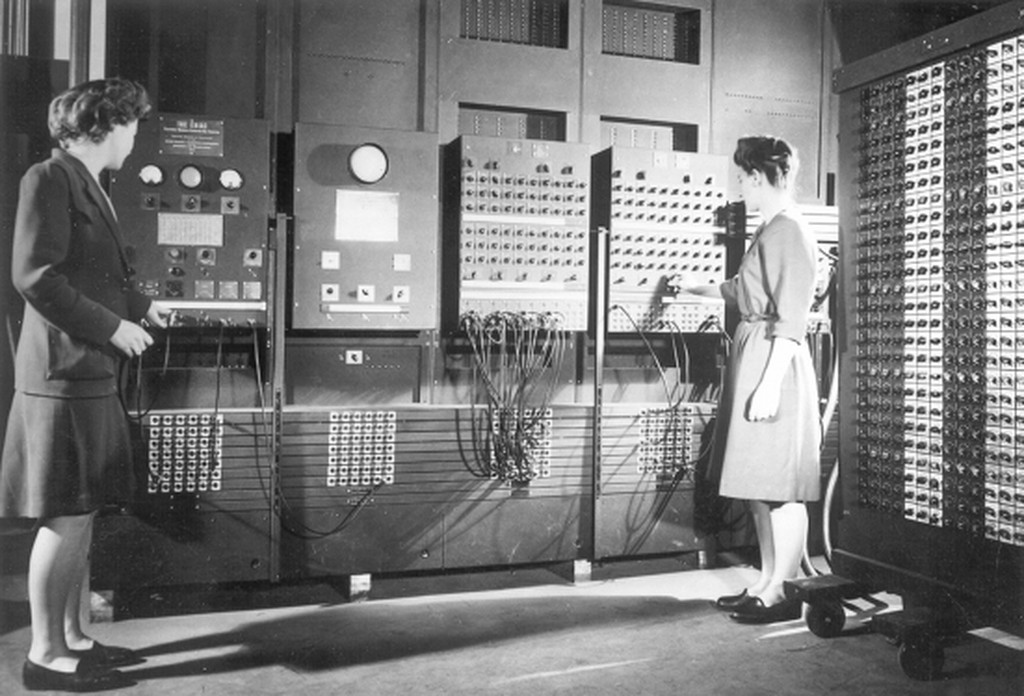

The first fully electronic computers emerged during the 1930s and 1940s. These early computers used vacuum tubes as their primary electronic component. One of the most famous examples is the Electronic Numerical Integrator and Computer (ENIAC), developed in the United States during World War II to calculate artillery firing tables. ENIAC was massive and consumed a lot of power, but it marked a significant milestone in computing history.

Transistors and Integrated Circuits

The transistor, invented at Bell Labs in 1947, and the integrated circuit, developed in the late 1950s, revolutionized computing technology. Transistors replaced bulky vacuum tubes, making computers smaller, more reliable, and more efficient. This era saw the advent of mainframe computers and early minicomputers.

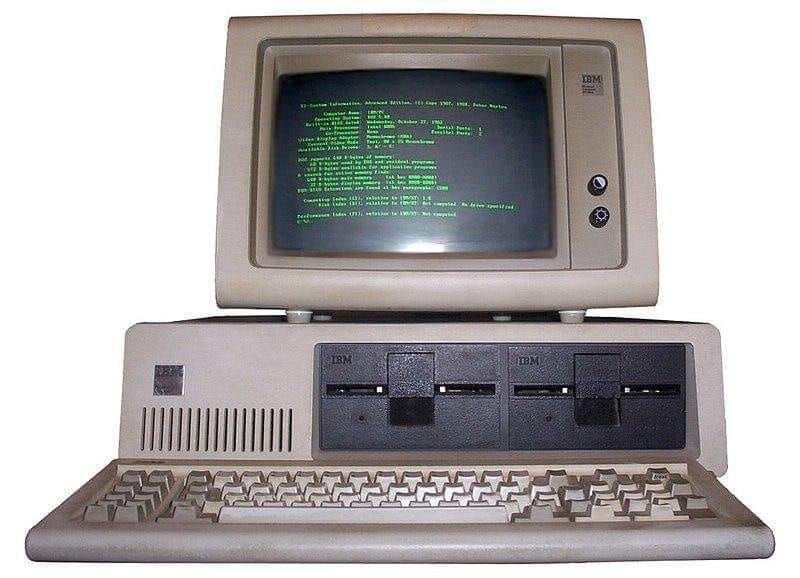

Microprocessors and Personal Computers (1970s-1980s)

The invention of the microprocessor in the early 1970s paved the way for personal computers. The Intel 4004, introduced in 1971, is considered the first commercially available microprocessor. With the development of microprocessors, computers became more accessible and affordable for individual users, leading to the PC revolution.

Advancements in Microelectronics and Networking

Over the decades, computers became faster, smaller, and more powerful due to continuous advancements in microelectronics and integrated circuits. The development of the internet and networking technologies in the late 20th century further revolutionized computing, enabling global communication and the creation of the World Wide Web.

Modern Computing Era

In recent years, computing technology has progressed rapidly with the rise of mobile devices, cloud computing, artificial intelligence, and early quantum computers. Computers have become an integral part of everyday life, driving innovation across various industries and transforming the way we live, work, and communicate.

Transistors and Silicon Chips

As mentioned earlier, the invention of the transistor in the late 1940s was a significant breakthrough. Transistors allowed for more compact and reliable electronic components than vacuum tubes, paving the way for smaller and faster computers. The subsequent development of integrated circuits, which combine many transistors and other components on a single silicon chip, further increased computing power while reducing the size and cost of computers.

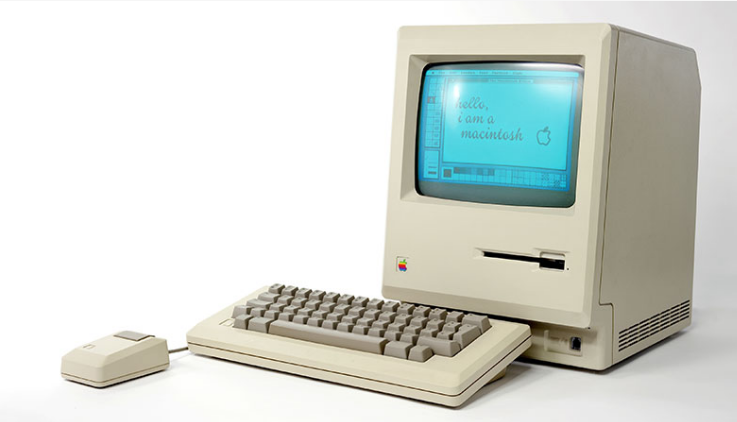

Graphical User Interface (GUI)

In the 1970s, researchers at Xerox PARC (Palo Alto Research Center) developed the Alto, one of the first computers with a graphical user interface (GUI) built around windows, icons, and a mouse. This groundbreaking innovation made computers more user-friendly and accessible to a broader audience. Apple popularized the GUI with the release of the Macintosh computer in 1984, and it has since become the standard interface for modern operating systems.

Software and Programming Languages

The development of higher-level programming languages, such as Fortran, COBOL, C, and later Java, Python, and many others, made it easier for programmers to write complex code. This abstraction layer allowed programmers to focus on problem-solving rather than low-level machine instructions. The availability of software applications also grew, catering to various needs and industries.

Internet and World Wide Web

The development of the internet, which began with ARPANET in the late 1960s, and the creation of the World Wide Web by Sir Tim Berners-Lee at CERN between 1989 and 1991 transformed computing and communication. The internet connected computers across the globe, enabling information sharing, e-commerce, social networking, and various online services that have become integral to modern life.

Mobile Computing

The 21st century saw the rise of mobile computing with the introduction of smartphones and tablets. These devices integrated computing power with communication and mobility, revolutionizing how people access information and interact with technology. Mobile apps and mobile-friendly websites became an essential part of the computing landscape.

Cloud Computing

Cloud computing emerged as a paradigm shift in how computing resources are delivered and accessed. It allows users to store data and run applications remotely on servers hosted on the internet. This model offers flexibility, scalability, and cost-effectiveness, enabling individuals and businesses to access powerful computing resources without the need to maintain their physical infrastructure.

Artificial Intelligence (AI) and Machine Learning

Advancements in algorithms, processing power, and big data led to significant progress in artificial intelligence and machine learning. AI applications have become pervasive, from voice assistants like Siri and Alexa to image recognition, natural language processing, autonomous vehicles, and more. AI is transforming various industries and driving innovations in healthcare, finance, and other fields.

Quantum Computing

Quantum computing is an emerging field that harnesses principles of quantum mechanics, such as superposition and entanglement, to process information. For certain problems, such as factoring large integers or simulating molecules, quantum algorithms could be dramatically faster than the best known classical methods, which could lead to breakthroughs in cryptography, optimization, and scientific simulations.
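
To give a feel for superposition and interference, the following sketch simulates a single qubit classically in plain Python. It applies a Hadamard gate to the |0⟩ state, producing an equal superposition, and then applies it again so that the amplitudes interfere and return the qubit to |0⟩. The helper names are hypothetical; real quantum software uses dedicated frameworks, and simulating n qubits this way needs 2ⁿ amplitudes, which is one reason quantum hardware is of interest.

```python
import math


def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a single-qubit state vector [a0, a1]."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


def probabilities(state):
    """Born rule: the probability of each outcome is the squared magnitude."""
    return [abs(amp) ** 2 for amp in state]


s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]

zero = [1, 0]                            # the |0> state
superposed = apply_gate(HADAMARD, zero)  # equal superposition of |0> and |1>
print(probabilities(superposed))         # roughly [0.5, 0.5]
back = apply_gate(HADAMARD, superposed)  # amplitudes for |1> cancel out
print(probabilities(back))               # roughly [1.0, 0.0]
```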

Internet of Things (IoT)

The Internet of Things refers to the interconnection of everyday objects and devices to the internet, allowing them to collect and exchange data. IoT has led to the development of smart homes, wearable devices, industrial automation, and various applications that enhance efficiency and convenience in our lives.

Edge Computing

Edge computing is an emerging paradigm that brings computation and data storage closer to the location where it’s needed, rather than relying solely on centralized data centers or the cloud. This approach reduces latency and bandwidth usage, making it ideal for applications that require real-time processing, such as autonomous vehicles, industrial automation, and augmented reality.

5G Technology

Fifth-generation (5G) wireless technology represents a major leap in mobile communication. It offers significantly higher data transfer speeds, lower latency, and the ability to connect more devices simultaneously. 5G has the potential to revolutionize mobile computing, enabling advancements in virtual reality, Internet of Things, and other data-intensive applications.

Quantum Internet

Building on the principles of quantum mechanics, researchers are exploring the concept of a quantum internet, in which quantum bits (qubits) can be shared and manipulated between distant locations. Quantum communication promises encryption whose security rests on the laws of physics rather than on computational difficulty, which could significantly improve data security and privacy.

Neuromorphic Computing

Neuromorphic computing is inspired by the architecture and operation of the human brain. It involves designing hardware and algorithms that mimic the brain’s neural networks, enabling highly efficient and parallel processing. Neuromorphic chips have the potential to revolutionize AI and machine learning applications.
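
Many neuromorphic chips are built around spiking neuron models. As a rough illustration, here is a minimal discrete-time leaky integrate-and-fire (LIF) neuron, one widely used model: its membrane potential leaks a little each step, accumulates input, and emits a spike when it crosses a threshold. The threshold, leak factor, and function name are illustrative assumptions; real neuromorphic hardware runs very large numbers of such neurons in parallel, often event-driven, rather than looping in software.

```python
def lif_spike_times(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns the list of time steps at which the neuron spikes.
    """
    potential = reset
    spikes = []
    for t, current in enumerate(input_current):
        potential = potential * leak + current  # leak, then integrate input
        if potential >= threshold:              # fire when threshold is crossed
            spikes.append(t)
            potential = reset                   # reset after each spike
    return spikes


# A constant weak input makes the neuron fire periodically.
print(lif_spike_times([0.3] * 10))  # [3, 7]
```

The neuron only produces output when something happens (a spike), which is part of why spiking hardware can be energy-efficient.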

DNA Computing

DNA computing is a novel approach that uses DNA molecules to perform computations. DNA has immense data storage potential and can perform parallel processing on a vast scale. While still in the early stages of development, DNA computing could potentially revolutionize data storage and computation in the future.
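
Because DNA has four bases (A, C, G, and T), each nucleotide can in principle store two bits. The sketch below shows that idea with a simple hypothetical mapping (00→A, 01→C, 10→G, 11→T); practical DNA storage schemes are more elaborate, adding error correction and avoiding sequences that are hard to synthesize or read.

```python
# Hypothetical 2-bit-per-base mapping, for illustration only.
BASE_FOR_BITS = {"00": "A", "01": "C", "10": "G", "11": "T"}
BITS_FOR_BASE = {base: bits for bits, base in BASE_FOR_BITS.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a DNA-like strand, two bits per base."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BASE_FOR_BITS[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Invert encode(): read two bits per base and regroup into bytes."""
    bits = "".join(BITS_FOR_BASE[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```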

Quantum Machine Learning

Combining quantum computing with machine learning techniques holds the promise of solving complex problems even faster. Quantum machine learning algorithms are being developed to harness the power of quantum computing for tasks like optimization, pattern recognition, and data analysis.

Biocomputing and Synthetic Biology

Biocomputing explores the use of biological components, such as DNA and enzymes, to perform computations and store data. Synthetic biology involves engineering living organisms to perform specific tasks. These fields have the potential to create biological computers and novel applications in healthcare, environmental monitoring, and more.

Brain-Computer Interfaces (BCIs)

BCIs establish direct communication between the human brain and external devices. They have promising medical applications, such as enabling paralyzed individuals to control computers or robotic limbs with their thoughts. BCIs also have potential in the gaming and entertainment industries.

Quantum Cryptography

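Quantum cryptography leverages the principles of quantum mechanics to provide highly secure communication. Its best-known application, quantum key distribution (QKD), allows two parties to share cryptographic keys in such a way that any eavesdropping attempt disturbs the transmitted quantum states and can be detected. Although the underlying protocols are secure in principle, practical systems can still be vulnerable to implementation flaws, so quantum cryptography greatly strengthens data security rather than guaranteeing it absolutely.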
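
The text does not name a specific protocol, but the best-known QKD scheme is BB84, proposed by Bennett and Brassard in 1984. The following classical simulation is only a sketch of its logic, with no real quantum physics involved: Alice sends random bits in random bases, Bob measures in random bases, and both keep only the positions where their bases matched (“sifting”). If an eavesdropper intercepts and resends every photon after measuring in a random basis, about 25% of the sifted bits disagree, which is how the intrusion is detected. The function name and parameters are hypothetical.

```python
import random


def bb84(n_bits, eavesdrop=False, seed=None):
    """Classically simulate BB84 sifting; return (sifted key length, error rate)."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n_bits)]
    alice_bases = [rng.choice("+x") for _ in range(n_bits)]
    bob_bases = [rng.choice("+x") for _ in range(n_bits)]

    alice_key, bob_key = [], []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        value, basis = bit, a_basis  # the "photon" as Alice prepared it
        if eavesdrop:
            # Intercept-resend: Eve measures in a random basis. A wrong guess
            # gives her a random bit, and she resends in her own basis.
            e_basis = rng.choice("+x")
            if e_basis != basis:
                value = rng.randint(0, 1)
            basis = e_basis
        # Bob reads the value only if his basis matches the photon's basis.
        measured = value if b_basis == basis else rng.randint(0, 1)
        # Sifting: keep positions where Alice's and Bob's bases agree.
        if a_basis == b_basis:
            alice_key.append(bit)
            bob_key.append(measured)

    errors = sum(a != b for a, b in zip(alice_key, bob_key))
    return len(alice_key), errors / max(len(alice_key), 1)


print(bb84(10_000, seed=1))                  # error rate (second value) is 0.0
print(bb84(10_000, eavesdrop=True, seed=1))  # error rate close to 0.25
```

In practice Alice and Bob compare a random sample of their sifted bits over a public channel; an error rate well above the expected noise level tells them to discard the key.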

Autonomous Systems and Robotics

Advancements in artificial intelligence, machine learning, and sensor technologies have propelled the development of autonomous systems and robotics. These systems can perform tasks without human intervention, ranging from autonomous vehicles and drones to robotic process automation in industries like manufacturing and logistics.

Virtual and Augmented Reality

Virtual reality (VR) and augmented reality (AR) technologies have become increasingly sophisticated and accessible. VR immerses users in virtual worlds, while AR overlays digital information onto the real world. Both technologies have applications in gaming, training, education, and various industries, including architecture and healthcare. For example, VR can be used to train surgeons or to let people with disabilities experience places that would otherwise be hard for them to reach. AR can be used to let people try on makeup virtually or to help them find their way around a city.

Quantum AI

Quantum AI refers to the intersection of quantum computing and artificial intelligence. It explores how quantum computing can enhance AI algorithms and solve complex problems that are currently beyond the reach of classical computers. Quantum AI has the potential to transform fields like drug discovery, materials science, and optimization.

Biometric Authentication

Biometric authentication methods, such as fingerprint scanning, facial recognition, and iris scanning, have gained popularity for secure user identification. These methods provide a more reliable and convenient way to access devices and systems, and they are increasingly used in smartphones, laptops, and other devices.

Blockchain and Cryptocurrencies

Blockchain is a decentralized, tamper-resistant distributed ledger technology that gained prominence with the emergence of cryptocurrencies like Bitcoin. Beyond digital currencies, blockchain has applications in supply chain management, healthcare, voting systems, and more, promising increased transparency and security. User education, such as phishing awareness training, remains important as well, because attackers often target people’s credentials and keys rather than the ledger itself.
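
Much of a blockchain’s tamper resistance comes from hash chaining: each block stores a cryptographic hash of the previous block, so altering an earlier record breaks every link after it. The toy sketch below shows only that mechanism, using SHA-256 from Python’s standard `hashlib`; it omits consensus, proof-of-work, digital signatures, and networking, and the function names are hypothetical.

```python
import hashlib
import json


def block_hash(block):
    """Hash a block's contents deterministically (keys sorted)."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_chain(records):
    """Build a toy chain in which each block stores its predecessor's hash."""
    chain = []
    prev = "0" * 64  # placeholder "previous hash" for the first (genesis) block
    for i, data in enumerate(records):
        block = {"index": i, "data": data, "prev_hash": prev}
        chain.append(block)
        prev = block_hash(block)
    return chain


def is_valid(chain):
    """Return True if every block points at the hash of the block before it."""
    for prev_block, block in zip(chain, chain[1:]):
        if block["prev_hash"] != block_hash(prev_block):
            return False
    return True


ledger = make_chain(["Alice pays Bob 5", "Bob pays Carol 2", "Carol pays Dave 1"])
print(is_valid(ledger))                    # True
ledger[1]["data"] = "Bob pays Carol 200"   # tamper with a middle block
print(is_valid(ledger))                    # False
```

This check alone cannot detect a change to the newest block, since no later block references it; real systems rely on many independent nodes agreeing on the chain to cover that case.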

Quantum Sensing and Imaging

Quantum sensing and imaging technologies leverage quantum properties to achieve unprecedented levels of sensitivity and resolution. These technologies have applications in fields like geological exploration, medical imaging, and the detection of gravitational waves.

Energy-Efficient Computing

With growing concerns about energy consumption and climate change, there is a strong focus on developing energy-efficient computing technologies. This includes low-power processors, data centers powered by renewable energy, and energy-aware algorithms that optimize computational tasks.

Computational Biology and Personalized Medicine

Computational biology isn’t limited to genomics and drug discovery. In personalized medicine, computational techniques can be used to tailor treatment parameters to an individual patient’s characteristics, for example in laser therapy, where precisely targeting the affected areas improves effectiveness while minimizing damage to surrounding tissue.

These developments demonstrate the diverse and exciting advancements in computing that continue to shape our world. As technology evolves, computing is expected to touch every aspect of our lives, leading to more efficient, secure, and innovative solutions to global challenges.
